In reality, I am not a long-haired Asian woman, and when I create virtual avatars of myself, they’re never long-haired, Asian, or female. Yet at this week’s Siggraph 2019 conference in Los Angeles, FaceUnity’s “automatic” cloud AI avatar generator snapped a photo of me and quickly came to some very incorrect conclusions. “It thinks you’re a lady,” one of the booth attendants said, slightly smiling. I walked away from the demo bemused, thinking about how Apple will eventually add the same AI-powered feature to iOS’s Animoji/Memoji generator, but will make it work properly.

This, in a nutshell, is the 2019 state of AI in computer graphics. Lots of developers are finding ways to incorporate AI and machine learning technologies into projects that will be mainstream within the next five years, but you often have to look beyond their currently mixed results — and their least performant examples — to appreciate how important they’ll soon become.

AI as a tool for facial and motion capture technologies

At the same time as FaceUnity’s technology was failing to virtualize a short-haired Caucasian male, multiple Siggraph exhibitors were showing off real-time motion capture tools capable of dynamically monitoring a person’s entire body or just a face, while instantly recreating the movements on photorealistic simulacra. Going in the opposite direction (from digital to analog), exhibitor Lulzbot showed off $2,950 3D printers that can produce wearable human masks indistinguishable from the people they’re based upon — Mission: Impossible technology made real.

Granted, CG pioneers developed virtually perfect digital simulations of skin textures, eyes, and hair many years ago, so the underlying technology isn’t new. But in 2019, the translation of human motion into digital forms is happening in real time, at high resolutions, and with great accuracy on consumer- or near-consumer-grade hardware. With the right CPU and video card, a virtual “you” can look just like the real you, or as plausibly and completely different as you prefer.

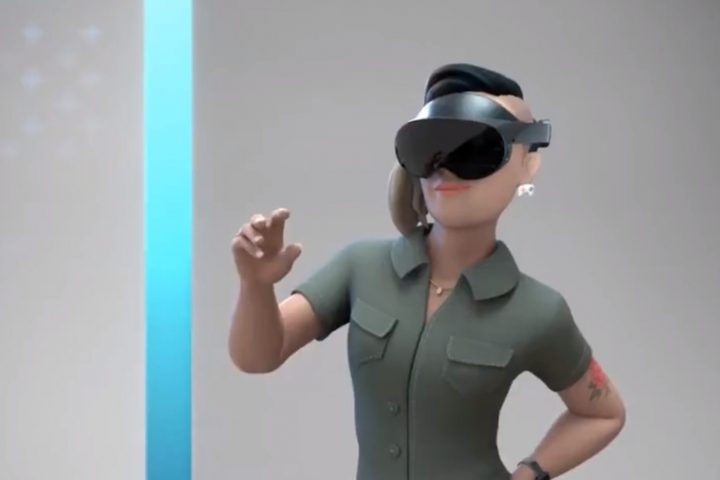

Capture and display solutions are continuing to evolve as well. Intel’s booth was almost entirely focused on showing off voxel-based volumetric captures of live performances from musician and comedian Reggie Watts, which could be displayed in VR with headsets, in AR with tablets, or on Looking Glass’ glasses-free 3D screens (above). AI-assisted software combines the 360-degree input from multiple cameras into a single data stream that can be viewed in 3D from multiple angles or, in the case of a separate Looking Glass demo, rotated at will using touch gestures.

The sophistication and output of these 3D display technologies vary dramatically based on a variety of factors. Intel’s exhibit showed how the concept works with an unthinkably expensive capture rig and a $6,000 Looking Glass Pro screen to create moving images. But in another Siggraph area, an artist used multiple photographs to create fixed-position 3D representations of people hugging, inside snow globe/paperweight-sized transparent housings. Thus, two different takes on the same basic concept were separately presented as technology and art.

AI as an artist’s tool, or artist

AI is capable of taking on a more prominent role in creating art. While we went into detail on Nvidia’s GauGAN in a separate article, seeing the digital art-generating technology in person was fairly striking. Released in March, the software enables an artist — even a bad one — to lay out a primitive sketch that’s instantly transformed into a photorealistic landscape via a generative adversarial AI system.

Only months later, GauGAN has already been used to create 500,000 images, and at Siggraph, Nvidia had multiple demo stations set up to let human artists sketch the concept while the computer does most of the actual artistic work. The basic colors on the left all correlate to elements such as “sky,” “cloud,” “grass,” and “water,” telling the software roughly where to place each element before it automatically generates 3D shapes and textures to fill the spaces.

GauGAN’s machine learning underpinnings — a million Flickr images teaching compositional relationships between 180 natural objects — make it a prime example of how AI can influence CG. But Nvidia’s booth was filled with other examples where AI played a more subtle role, such as a demonstration of photorealistic real-time ray tracing with V-Ray, software that uses Nvidia AI to “denoise” (read: automatically clean while preserving detail) images. A walk around the demo stations made clear that just as Adobe’s Photoshop once blurred the lines dividing 2D art from photography, Nvidia’s technologies are presently obliterating the differences between photography and 3D rendering.

AI as a bullet point

Having covered emerging technologies for years, I’ve never liked sifting through pitches where a technology is being used more as a buzzword to attract attention than as a major feature of a new offering. Just like 5G, AI has become a bullet point that creators toss into projects to attract attention, distracting from more substantial — some might say more legitimate — uses elsewhere.

One of the examples from Siggraph 2019 that won’t leave my head is the art exhibit RuShi, which artist John Wong explained “is about prediction, fate, and superstition, questioning what we really need at the age of AI.” I discussed it in my Siggraph preview article, noting that it purports to transform your birth date and birth time into a “flow of colors” that sits alongside other participants’ visualized data on large projection screens. As you stand and look at it, you might ask yourself whether you’re comfortable with “personal data” continuing to float around in some form alongside other participants’ information.

The thing that’s lingered with me long after leaving the exhibit is whether this transformed data really means anything, and whether it really has any AI component at all. It’s not as if someone couldn’t have used four numbers to generate moving lines on a screen 30 years ago, before “the age of AI,” and the result wouldn’t have looked much different, if at all, back then. To be clear, I’m not questioning whether it’s art; I’m just questioning whether AI actually has a role in the art.

Yet that alone says a lot about where AI is as of 2019. In the case of FaceUnity’s avatars, AI may be incompetent or marginally competent at its task, while with RuShi, it might be as capable as an early digital artist was 30 years ago, and at Nvidia, it could be creating art to the standard of a contemporary human renderer. Regardless of the specific application and quality of output, AI is here to stay, and it’s only becoming more impressive over time.

This post by Jeremy Horowitz originally appeared on VentureBeat.

The post At Siggraph 2019, AI-aided CG Flexed Its Muscles And Slightly Stumbled appeared first on UploadVR.
