A guest post by the Engineering team at

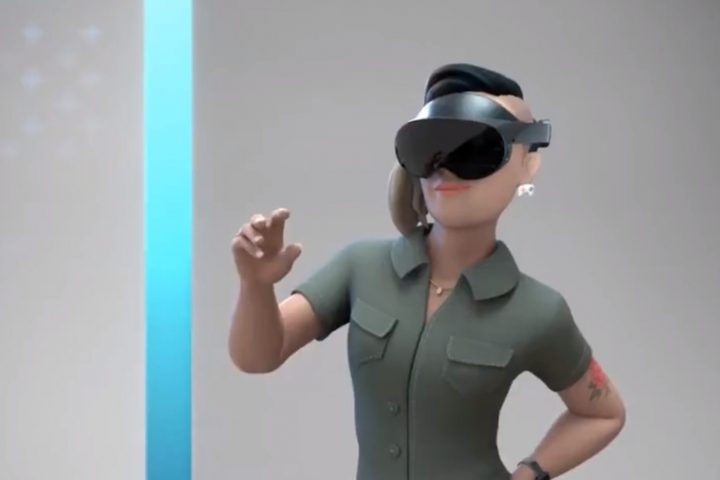

Figure 1: The Video-Touch system in action: a single user controls a robot during a Video-Touch call. Note the sensors’ feedback when grasping a tube [

Figure 2: Overall scheme of the Video-Touch system: from the user’s webcam to the robot control module [

Figure 3: Modified MediaPipe Hand Landmark CPU subgraph. Note the HandGestureRecognition calculator

To alleviate the problem of bad generalization, we implemented a second version. We trained the Gradient Boosting classifier from scikit-learn on a manually collected and labeled dataset of 1000 keypoint sets: 200 each for the “move”, “angle”, and “grab” classes, and 400 for the “no gesture” class. By the way, today such a dataset could be easily obtained using the recently released
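The post does not say how the keypoints were turned into classifier inputs, so the following is only a minimal sketch of one common approach: shifting the landmarks so the wrist is the origin and dividing by the hand's extent, so that the features do not depend on where the hand is in the frame or how close it is to the camera. The function name `landmarks_to_features` and the normalization itself are assumptions for illustration; the class counts are the ones quoted above, and the 21-landmark layout with the wrist at index 0 follows MediaPipe Hands.

```python
from math import hypot

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per hand; index 0 is the wrist

# Dataset composition quoted in the post.
CLASS_COUNTS = {"move": 200, "angle": 200, "grab": 200, "no gesture": 400}


def landmarks_to_features(landmarks):
    """Flatten (x, y) landmarks into a translation- and scale-invariant vector.

    Hypothetical preprocessing step, not taken from the original project.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")

    wrist_x, wrist_y = landmarks[0]
    # Move the wrist to the origin so the position in the frame does not matter.
    shifted = [(x - wrist_x, y - wrist_y) for x, y in landmarks]
    # Divide by the largest wrist-to-landmark distance so hand size does not matter.
    scale = max(hypot(dx, dy) for dx, dy in shifted) or 1.0

    features = []
    for dx, dy in shifted:
        features.extend((dx / scale, dy / scale))
    return features
```

A vector produced this way has 42 values per hand and could be passed, together with its label, to any standard classifier such as the Gradient Boosting model mentioned above.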

Figure 4: Gesture classes recognized by our model (“no gesture” class is not shown).

Right after the publication, we came up with a fully-connected neural network trained in Keras on exactly the same dataset as the Gradient Boosting model, and it gave an even better result of 93%. We converted this model to the TensorFlow Lite format, so we can now run the gesture recognition ML model right inside the Hand Gesture Recognition calculator.
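To make concrete what such a model computes at inference time, here is a dependency-free sketch of a fully-connected classifier's forward pass: dense layers with ReLU between them and a softmax over the gesture classes. The layer sizes, weights, and the helper names `dense`, `relu`, `softmax`, and `predict` are all hypothetical; the real network's architecture is not described in the text, and in practice the TensorFlow Lite interpreter performs this computation.

```python
from math import exp


def dense(inputs, weights, biases):
    """One fully-connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * row[j] for x, row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    peak = max(values)
    exps = [exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def predict(features, layers, labels):
    """Run features through the layers and return (best_label, probabilities).

    `layers` is a list of (weights, biases) pairs; ReLU is applied after
    every layer except the last one, whose outputs go through softmax.
    """
    activations = features
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    probabilities = softmax(activations)
    best = max(range(len(labels)), key=probabilities.__getitem__)
    return labels[best], probabilities
```

With trained weights loaded into `layers`, `predict(features, layers, ("no gesture", "move", "angle", "grab"))` would return the most likely gesture along with the per-class probabilities.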

Figure 5: Fully-connected network for gesture recognition converted to TFLite model format.

Once we have the current hand location and the current gesture class, we need to pass them to the robot control module. We do this with the help of the high-performance asynchronous messaging library
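Whatever transport is used, the hand location and gesture class have to be packed into a message that the robot side can parse. The sketch below shows one possible wire format, compact JSON encoded as UTF-8 bytes. The `HandState` fields and the `encode` and `decode` helpers are assumptions made for illustration and are not the project's actual protocol; sending the bytes over the messaging library is deliberately left out.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class HandState:
    """Hypothetical message: normalized hand position plus the recognized gesture."""
    x: float
    y: float
    gesture: str
    timestamp: float  # seconds, lets the receiver drop stale commands


def encode(state):
    """Serialize a HandState to compact UTF-8 JSON bytes ready to be sent."""
    return json.dumps(asdict(state), separators=(",", ":")).encode("utf-8")


def decode(payload):
    """Rebuild a HandState from bytes produced by encode()."""
    return HandState(**json.loads(payload.decode("utf-8")))
```

On the sending side this could be used as `encode(HandState(x=0.42, y=0.58, gesture="grab", timestamp=time.time()))` (with `import time`), and the robot control module would call `decode` on each received payload.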

Figure 6: Hand-robot control logic follows the idea of a joystick with pre-defined movement directions [
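The joystick idea can be sketched as follows: the hand's offset from a neutral point selects one of a few fixed directions, and a small dead zone around the center means "do not move". The four direction names, the center point, the dead-zone radius, and the function `joystick_direction` are assumptions chosen for illustration; the actual set of directions used by the system is the one shown in the figure.

```python
from math import atan2, hypot, pi

# Hypothetical set of pre-defined directions, ordered counter-clockwise from "right".
DIRECTIONS = ("right", "up", "left", "down")


def joystick_direction(x, y, center=(0.5, 0.5), dead_zone=0.1):
    """Map a normalized hand position (0..1, y growing downward) to a direction.

    Returns None while the hand stays inside the dead zone around the center.
    """
    dx = x - center[0]
    dy = center[1] - y  # flip so that "up" in the image is positive
    if hypot(dx, dy) < dead_zone:
        return None

    angle = atan2(dy, dx)  # -pi..pi, 0 points right, counter-clockwise positive
    # Snap the angle to the nearest quarter turn and wrap it into 0..3.
    sector = round(angle / (pi / 2)) % len(DIRECTIONS)
    return DIRECTIONS[sector]
```

For example, a hand near the right edge of the frame, `joystick_direction(0.9, 0.5)`, yields `"right"`, while a hand at the center yields `None`, so the robot stays still. Snapping to fixed directions trades precision for robustness against small jitter in the detected hand position.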

Figure 7: High fidelity tactile sensor array: a) Array placement on the gripper. b) Sensor data when the gripper takes a pipette. c) Sensor data when the gripper takes a tube [

Figure 8: Multi-user robotic arm control feature. The users are able to perform a COVID-19 test during a regular video call [source video].

Endnotes

Thus, by using MediaPipe and a robot, we built an effective multi-user robot teleoperation system. Potential future uses of teleoperation systems include medical testing and experiments in difficult-to-access environments such as outer space. The system’s multi-user functionality addresses the real problem of effective remote collaboration: it lets a group of several people work together on projects that need manual remote control.

Another nice feature of our pipeline is that the robot can be controlled from any device with a camera, e.g. a mobile phone. One could also operate other hardware form factors, such as edge devices, mobile robots, or drones, instead of a robotic arm. Of course, the current solution has some limitations: latency, the utilization of the z-coordinate (depth), and the convenience of the gesture types could all be improved. We can’t wait for updates from the MediaPipe team to try them out, and we look forward to trying new types of gripper (with fingers), two-hand control, or even whole-body control (hello, “Real Steel”!).

We hope the post was useful for you and your work. Keep coding and stay healthy. Thank you very much for your attention!

This blog post is curated by Igor Kibalchich, ML Research Product Manager at Google AI

Source: Video-Touch: Multi-User Remote Robot Control in Google Meet call by DNN-based Gesture Recognition